Lin Yen-Chen1, Pete Florence2,

Jonathan T. Barron2, Tsung-Yi Lin3

Alberto Rodriguez1, Phillip Isola1

1MIT, 2Google, 3Nvidia

Thin, reflective objects such as forks and whisks are common in our daily lives, but they are particularly challenging for robot perception because it is hard to reconstruct them using commodity RGB-D cameras or multi-view stereo techniques. While traditional pipelines struggle with objects like these, Neural Radiance Fields (NeRFs) have recently been shown to be remarkably effective for performing view synthesis on objects with thin structures or reflective materials. In this paper we explore the use of NeRF as a new source of supervision for robust robot vision systems. In particular, we demonstrate that a NeRF representation of a scene can be used to train dense object descriptors. We use an optimized NeRF to extract dense correspondences between multiple views of an object, and then use these correspondences as training data for learning a view-invariant representation of the object. NeRF’s usage of a density field allows us to reformulate the correspondence problem with a novel distribution-of-depths formulation, as opposed to the conventional approach of using a depth map. Dense correspondence models supervised with our method significantly outperform off-the-shelf learned descriptors by 106% (PCK@3px metric, more than doubling performance) and outperform our baseline supervised with multi-view stereo by 29%. Furthermore, we demonstrate the learned dense descriptors enable robots to perform accurate 6-degree of freedom (6-DoF) pick and place of thin and reflective objects.

Input RGB & Output Masks / Dense Descriptors

6-DoF Grasping of Forks

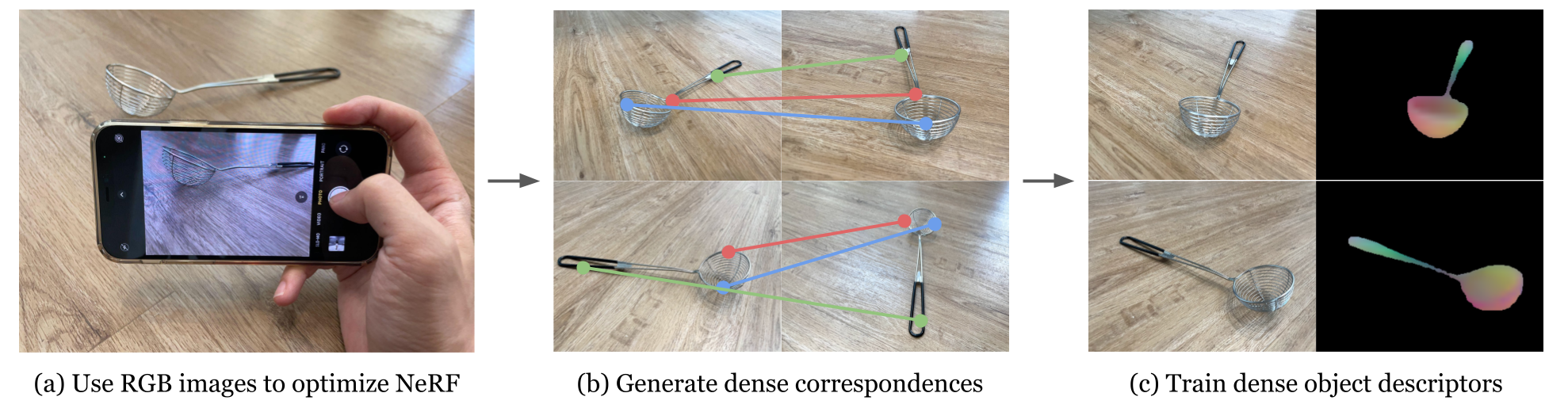

The pipeline consists of three stages: (a) We collect RGB images of the object of interest and optimize a NeRF for that object;

(b) The recovered NeRF’s density field is then used to automatically generated dataset of dense correspondences;

(c) We use the generated dataset to train a model to estimate dense object descriptors,and evaluate that model on previously-unobserved real images.

In the following, we show NeRF's rendered RGB and depth images along with the dense descriptors predicted by our model. The dense descriptors

are invariant to the viewpoint, allowing the robot to track object's parts.

NeRF's RGB

NeRF's Depth

Dense Descriptors

For comparison, we show the mesh reconstructed by COLMAP, a widely used Multi-view Stereo library. The reconstructed mesh has many holes (in blue color) and can't be used to generate correct correspondences for learning descriptors. We also show the pointcloud captured by a RealSense D415, a commonly used RGB-D camera. Again, there exist many holes (black color) and the geometry is wrong.

COLMAP's Reconstruction

RGBD Camera's Pointcloud

We provide an interactive tool to inspect the correspondence dataset generated by NeRF-Supervision. By clicking on a pixel in the left image, the tool will visualize multiple corresponding pixels in the right image. We note that there could be more than one corresponding pixels and their opacities are weighted by densities predicted by NeRFs.

Generalization. We show 6-DoF grasping of objects that are unseen during training.

Strainers

Forks

Robustness. We show 6-DoF grasping with out-of-distribution background.

Strainers